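The Kubernetes control-plane services include kube-scheduler and kube-controller-manager. Both use a one-active, multiple-standby high-availability scheme, so at any given moment only one instance of each service actually does the work. Kubernetes implements a simple leader-election mechanism that relies on Etcd to elect the leader for the scheduler and for the controller-manager.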

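If the scheduler and controller-manager are started with the --leader-elect=true flag, their instances compete in an election after startup, and only the instance that becomes leader runs the actual business logic. The scheduler and the controller-manager each create an Endpoints object, named kube-scheduler and kube-controller-manager respectively, in the kube-system namespace (written through kube-apiserver and persisted in Etcd). An annotation on that object records the current leader and the time of its last renewal. The leader renews this record periodically to keep its role. Every standby instance periodically checks the record, and if it has not been renewed within the lease duration (15 seconds by default, set by --leader-elect-lease-duration), the standby tries to write itself in as the new leader. The scheduler and controller-manager instances never communicate with each other directly; Etcd's strong consistency guarantees that the leader is globally unique even under concurrent updates in a distributed setting.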
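The standby-side check described above boils down to one comparison: has `renewTime + leaseDurationSeconds` already passed? The bash sketch below shows only that comparison. The function name `lease_expired` is hypothetical, and it assumes GNU `date` (for `-d` parsing of RFC 3339 timestamps). It is a simplification: client-go's real implementation measures the lease from the local time at which a standby last saw the record change, rather than trusting `renewTime`, so clock skew between masters does not matter.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit status 0) if a leader lease has expired,
# i.e. NOW is later than RENEW_TIME + LEASE_SECONDS, so a standby may try to
# write itself into the lock as the new leader.
# Usage: lease_expired RENEW_TIME LEASE_SECONDS [NOW]
#   RENEW_TIME and NOW are RFC 3339 UTC timestamps (e.g. 2018-06-10T16:19:08Z);
#   NOW defaults to the current time. Returns 2 if a timestamp cannot be parsed.
# Assumes GNU date for the -d option.
lease_expired() {
  local renew_time=$1 lease_seconds=$2
  local now=${3:-$(date -u +%Y-%m-%dT%H:%M:%SZ)}
  local renew_epoch now_epoch
  renew_epoch=$(date -u -d "$renew_time" +%s) || return 2
  now_epoch=$(date -u -d "$now" +%s) || return 2
  (( now_epoch > renew_epoch + lease_seconds ))
}

# Example values: renewed at 16:19:08 with a 15 s lease, checked at 16:19:24.
if lease_expired "2018-06-10T16:19:08Z" 15 "2018-06-10T16:19:24Z"; then
  echo "lease expired: a standby may try to take over"
fi
```

Checked at 16:19:23 or earlier, the same call would report the lease as still valid.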

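When the leader instance fails, the other instances try to promote themselves. If several of them update the Endpoints object at the same time, Etcd ensures that only one of the updates succeeds. This is how the scheduler and controller-manager elect a new leader smoothly after the old one goes down, so the service recovers quickly from the failure.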
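The "only one update succeeds" guarantee is optimistic concurrency: kube-apiserver turns each update into an etcd compare-and-swap on the object's resourceVersion, so if another instance has written in between, the update is rejected with a conflict (HTTP 409) and the loser has to re-read the record. The toy bash model below illustrates only that rule. It is not etcd: a temporary file stands in for the stored record, and the function `try_acquire` and the node names are hypothetical.

```shell
#!/usr/bin/env bash
# Toy model of the compare-and-swap that etcd performs on the lock object.
# This is NOT etcd: a local file stands in for the stored record, in the
# form "<version> <holder>", and the version plays the role of resourceVersion.
#
# try_acquire FILE EXPECTED_VERSION CANDIDATE
#   Writes CANDIDATE as holder (bumping the version) only if the stored
#   version still equals EXPECTED_VERSION; otherwise reports a conflict.
#   etcd performs this check-and-write atomically; the calls below simply
#   run one after another, so the sketch does not model real races.
try_acquire() {
  local file=$1 expected=$2 candidate=$3 version
  read -r version _ < "$file"
  if [ "$version" != "$expected" ]; then
    echo "conflict: $candidate expected version $expected, found $version" >&2
    return 1
  fi
  echo "$((version + 1)) $candidate" > "$file"
}

state=$(mktemp)
echo "12 node-old" > "$state"   # the old leader has stopped renewing

# Two standbys both read version 12 and try to take over.
try_acquire "$state" 12 node-a && echo "node-a is now leader"
try_acquire "$state" 12 node-b || echo "node-b lost: must re-read and retry"
cat "$state"                     # 13 node-a
rm -f "$state"
```

The second writer fails not because it was slower in wall-clock time but because the version it read is stale, which is exactly the property that keeps the leader unique.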

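A network failure has relatively little impact on leader election, because the scheduler and controller-manager elect their leader through Etcd. Hosts that can still reach Etcd (via kube-apiserver) keep electing a leader exactly as before. Even if the cluster is split into partitions, only the side that can still reach a majority (quorum) of Etcd members can write, so instances cut off from the quorum can neither renew nor acquire the lease, and Etcd ensures that only one instance holds the leader role at any time.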
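The partition argument rests on etcd's majority rule: a write (and therefore a lease renewal or takeover) commits only if a majority of etcd members acknowledge it, and at most one side of a partition can hold a majority. The quick bash calculation below, using a hypothetical helper `etcd_quorum`, shows the quorum size and the number of tolerated member failures for small clusters.

```shell
#!/usr/bin/env bash
# Hypothetical helper: quorum (majority) size of an etcd cluster with N members
# and how many member failures it can tolerate while still accepting writes.
etcd_quorum() {
  local n=$1
  local quorum=$(( n / 2 + 1 ))
  echo "members=$n quorum=$quorum tolerates=$(( n - quorum ))"
}

for n in 1 2 3 4 5; do
  etcd_quorum "$n"
done
# members=1 quorum=1 tolerates=0
# members=2 quorum=2 tolerates=0
# members=3 quorum=2 tolerates=1
# members=4 quorum=3 tolerates=1
# members=5 quorum=3 tolerates=2
```

Even member counts add no failure tolerance over the next smaller odd count, which is why 3 or 5 etcd members are the usual choice.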

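Check which instance currently holds the kube-controller-manager leader role:

```
[root@k8s-m1 ~]# kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml
apiVersion: v1
kind: Endpoints
metadata:
  annotations:
    control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"m7-autocv-gpu02_084534e2-6cc4-11e8-a418-5254001f5b65","leaseDurationSeconds":15,"acquireTime":"2018-06-10T15:40:33Z","renewTime":"2018-06-10T16:19:08Z","leaderTransitions":12}'
  ...
  name: kube-controller-manager
  namespace: kube-system
  resourceVersion: "4540"
  ...
```

holderIdentity is the leader's hostname followed by a unique ID, renewTime is the leader's last renewal, and leaderTransitions counts how many times leadership has changed hands.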

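Check which instance currently holds the kube-scheduler leader role:

```
[root@k8s-m1 ~]# kubectl get endpoints kube-scheduler --namespace=kube-system -o yaml
apiVersion: v1
kind: Endpoints
metadata:
  annotations:
    control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"m7-autocv-gpu01_7295c239-f2e9-11e8-8b5d-0cc47a2afc6a","leaseDurationSeconds":15,"acquireTime":"2018-11-28T08:41:50Z","renewTime":"2018-11-28T08:42:08Z","leaderTransitions":0}'
  ...
  name: kube-scheduler
  namespace: kube-system
  resourceVersion: "1013"
  ...
```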

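To test failover, pick one or two master nodes, stop kube-scheduler or kube-controller-manager on them, and check (in the systemd journal) whether an instance on another node has acquired the leader role.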
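Because holderIdentity has the form `<hostname>_<uuid>`, the failover test can compare the leader host before and after stopping a service. Endpoints-based locks keep the record in the `control-plane.alpha.kubernetes.io/leader` annotation. The bash helper below (hypothetical name `leader_host`) extracts the host from that annotation's JSON value with `sed`. The systemd unit names in the usage note are assumed to match a binary installation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the host part of holderIdentity, given the JSON
# value of the control-plane.alpha.kubernetes.io/leader annotation.
# holderIdentity has the form "<hostname>_<uuid>", so the host is everything
# before the last underscore. Returns 1 if no holderIdentity is found.
leader_host() {
  local json=$1 id
  # Extract the holderIdentity string, tolerating spaces around the colon.
  id=$(printf '%s\n' "$json" | sed -n 's/.*"holderIdentity" *: *"\([^"]*\)".*/\1/p')
  [ -n "$id" ] || return 1
  printf '%s\n' "${id%_*}"
}

# Example value (the kube-scheduler holderIdentity shown earlier, shortened record):
leader_host '{"holderIdentity":"m7-autocv-gpu01_7295c239-f2e9-11e8-8b5d-0cc47a2afc6a","leaseDurationSeconds":15}'
# prints: m7-autocv-gpu01
```

In practice, feed it the live annotation, for example `leader_host "$(kubectl -n kube-system get endpoints kube-scheduler -o jsonpath='{.metadata.annotations.control-plane\.alpha\.kubernetes\.io/leader}')"`. Then run `systemctl stop kube-scheduler` on the reported host, wait longer than leaseDurationSeconds, run the command again, and follow `journalctl -u kube-scheduler -f` on the other masters to see the leader-election messages.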

kube-apiserver high availability

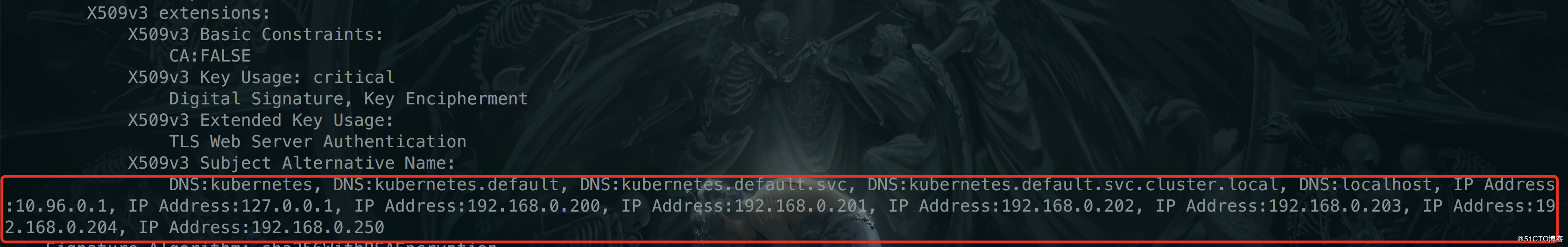

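Having covered how kube-controller-manager and kube-scheduler achieve high availability, let's get to the main topic: making kube-apiserver highly available. Here I use haproxy + keepalived. (Note: when generating the certificates, the VIP address must be included in the kube-apiserver certificate's SAN list; otherwise clients connecting through the VIP will fail certificate verification.)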
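A quick way to confirm that the VIP made it into the apiserver certificate is to print the certificate's Subject Alternative Names with `openssl x509 -noout -text` and look for the VIP. The bash helper below (hypothetical name `sans_contain_ip`) does the matching on that text output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `openssl x509 -noout -text` output on stdin and
# succeed only if IP appears as an "IP Address:" entry in the certificate's
# Subject Alternative Name list. Each entry is compared as a whole string,
# so 192.168.0.25 does not match 192.168.0.250.
sans_contain_ip() {
  local ip=$1
  grep -o 'IP Address:[0-9A-Fa-f.:]*' | grep -Fxq "IP Address:$ip"
}

# Example on a sample of openssl's SAN output:
printf '%s\n' '    X509v3 Subject Alternative Name:' \
  '        DNS:kubernetes, IP Address:127.0.0.1, IP Address:192.168.0.250' |
  sans_contain_ip 192.168.0.250 && echo "VIP is in the certificate"
```

Against a real certificate: `openssl x509 -in /path/to/kube-apiserver.pem -noout -text | sans_contain_ip 192.168.0.250`, where the path is a placeholder for wherever your apiserver certificate lives.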

1.1 Install haproxy and keepalived on all three masters

haproxy configuration file:

```
global
    ...

frontend monitor-in
    ...
    monitor-uri /monitor

...
    server ... check check-ssl verify none
    server ... check check-ssl verify none
    server ... check check-ssl verify none
```
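For reference, a minimal haproxy configuration consistent with the directives above (a `monitor-in` frontend with `monitor-uri /monitor`, plus three backend servers checked with `check check-ssl verify none`) might look like the output of the bash sketch below. Assumptions: haproxy listens on 8443 (the port the keepalived health-check script probes), each master's kube-apiserver listens on 6443, the masters are 192.168.0.200 to 192.168.0.202 (the addresses in the keepalived configuration), and the monitor port 33305, the function name `render_haproxy_cfg`, and the server names are arbitrary choices.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a minimal haproxy.cfg that forwards TLS traffic
# on FRONTEND_PORT to every master's kube-apiserver on APISERVER_PORT.
# Usage: render_haproxy_cfg FRONTEND_PORT APISERVER_PORT MASTER_IP...
render_haproxy_cfg() {
  local frontend_port=$1 apiserver_port=$2
  shift 2
  local monitor_port=33305   # arbitrary port for haproxy's own liveness URI
  cat <<EOF
global
    log /dev/log local0

defaults
    log     global
    mode    tcp
    timeout connect 5s
    # Long client/server timeouts so idle watch connections are not cut.
    timeout client  1h
    timeout server  1h

frontend monitor-in
    bind *:${monitor_port}
    mode http
    monitor-uri /monitor

frontend kube-apiserver
    bind *:${frontend_port}
    default_backend kube-apiserver

backend kube-apiserver
    balance roundrobin
EOF
  local i=1 ip
  for ip in "$@"; do
    # TLS passes through untouched; check-ssl makes the health check
    # complete a TLS handshake, and verify none skips certificate validation.
    printf '    server master%d %s:%s check check-ssl verify none\n' \
      "$i" "$ip" "$apiserver_port"
    i=$((i + 1))
  done
}
```

Usage: `render_haproxy_cfg 8443 6443 192.168.0.200 192.168.0.201 192.168.0.202 > /etc/haproxy/haproxy.cfg`, then `haproxy -c -f /etc/haproxy/haproxy.cfg` to validate it before restarting haproxy. Because haproxy and kube-apiserver run on the same masters, they must listen on different ports, which is why 8443 fronts 6443 here.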

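keepalived configuration file (the copy for 192.168.0.200):

```
global_defs {
    script_user root
}

vrrp_script haproxy-check {
    script "/bin/bash /etc/keepalived/check_haproxy.sh"
    interval 3
    weight -2
    fall 10
    rise 2
}

vrrp_instance haproxy-vip {
    state BACKUP
    priority 101
    # NIC name assumed; use the interface that carries 192.168.0.0/24
    interface eth0
    virtual_router_id 47
    advert_int 3

    unicast_src_ip 192.168.0.200
    unicast_peer {
        192.168.0.201
        192.168.0.202
    }

    virtual_ipaddress {
        192.168.0.250/24
    }

    track_script {
        haproxy-check
    }
}
```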

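A note on keepalived: by default it uses multicast, and adding the unicast_peer parameter switches it to unicast. The three keepalived configuration files therefore differ: unicast_src_ip holds the current node's IP, and unicast_peer lists the IP addresses of the other two nodes. Everything else, including the priority/weight settings and the BACKUP state, stays the same on all three nodes.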
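Since only unicast_src_ip and unicast_peer change from node to node, that section can be generated per node. The bash sketch below (hypothetical function `render_unicast`) prints it given the node's own IP followed by all master IPs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the unicast section of keepalived.conf for one node.
# Usage: render_unicast SELF_IP ALL_MASTER_IPS...
# SELF_IP becomes unicast_src_ip; every other IP is listed under unicast_peer.
render_unicast() {
  local self=$1 ip
  shift
  printf '    unicast_src_ip %s\n' "$self"
  printf '    unicast_peer {\n'
  for ip in "$@"; do
    if [ "$ip" != "$self" ]; then
      printf '        %s\n' "$ip"
    fi
  done
  printf '    }\n'
}

# Example: the section for 192.168.0.201.
render_unicast 192.168.0.201 192.168.0.200 192.168.0.201 192.168.0.202
```

For 192.168.0.201 this prints unicast_src_ip 192.168.0.201 with 192.168.0.200 and 192.168.0.202 as peers; passing the full master list every time keeps the three files from drifting apart.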

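keepalived health-check script, saved to /etc/keepalived/check_haproxy.sh (the path the vrrp_script in the keepalived configuration calls):

```shell
cat <<'EOF' > /etc/keepalived/check_haproxy.sh
#!/bin/bash
VIRTUAL_IP=192.168.0.250

errorExit() {
    echo "*** $*" 1>&2
    exit 1
}

# Only the node currently holding the VIP probes haproxy through it.
if ip addr | grep -qF "$VIRTUAL_IP"; then
    curl -s --max-time 2 --insecure "https://${VIRTUAL_IP}:8443/" -o /dev/null \
        || errorExit "Error GET https://${VIRTUAL_IP}:8443/"
fi
EOF
```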

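This completes the kube-apiserver high-availability setup. Every client that connects to the apiserver should now use the VIP address (https://192.168.0.250:8443, the port haproxy listens on), especially the cluster address in the kubectl client configuration.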
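Existing kubeconfig files that still point at a single master can be rewritten to use the VIP. The bash sketch below (hypothetical function `kubeconfig_use_vip`) assumes the usual kubeconfig layout where each cluster's address sits on its own `server:` line, and prints the result instead of editing in place so it can be reviewed first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print KUBECONFIG_FILE with every "server:" line pointed
# at https://VIP:PORT. Output goes to stdout so it can be reviewed before it
# replaces the original file.
# Usage: kubeconfig_use_vip KUBECONFIG_FILE VIP PORT
kubeconfig_use_vip() {
  local file=$1 vip=$2 port=$3
  # Keep the indentation and "server:" key, replace only the URL.
  sed -E "s#^([[:space:]]*server:[[:space:]]*).*#\1https://${vip}:${port}#" "$file"
}
```

For example, `kubeconfig_use_vip ~/.kube/config 192.168.0.250 8443 > /tmp/config.vip`, then inspect and move it into place. The kubectl-native alternative is `kubectl config set-cluster kubernetes --server=https://192.168.0.250:8443`, where the cluster name `kubernetes` is an assumption that depends on your kubeconfig.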