Configure the etcd environment: create an `etcd.sh` file under `/etc/profile.d` with the following content.

```shell
alias etcd_v2='etcdctl --cert-file /etc/kubernetes/pki/etcd/healthcheck-client.crt \
  --key-file /etc/kubernetes/pki/etcd/healthcheck-client.key \
  --ca-file /etc/kubernetes/pki/etcd/ca.crt \
  --endpoints https://10.15.1.3:2379,https://10.15.1.4:2379,https://10.15.1.5:2379'

alias etcd_v3='ETCDCTL_API=3 etcdctl \
  --cert /etc/kubernetes/pki/etcd/healthcheck-client.crt \
  --key /etc/kubernetes/pki/etcd/healthcheck-client.key \
  --cacert /etc/kubernetes/pki/etcd/ca.crt \
  --endpoints https://10.15.1.3:2379,https://10.15.1.4:2379,https://10.15.1.5:2379'
```
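Aliases are only expanded in interactive shells by default, so `etcd_v2` and `etcd_v3` will not work inside scripts or cron jobs. Below is a minimal sketch of the same shortcuts written as shell functions. It assumes the certificate paths and endpoints from `etcd.sh` above, and `"$@"` forwards whatever subcommand you pass. Use these *instead of* the aliases, not alongside them. An alias with the same name is expanded first in interactive shells, and that breaks the function definition.

```shell
# Sketch: function versions of the etcd_v2 / etcd_v3 aliases.
# Cert paths and endpoints are the ones from etcd.sh.
ETCD_PKI=/etc/kubernetes/pki/etcd
ETCD_ENDPOINTS=https://10.15.1.3:2379,https://10.15.1.4:2379,https://10.15.1.5:2379

etcd_v2() {
  # v2 API flags: --cert-file/--key-file/--ca-file.
  # ETCDCTL_API=2 is set explicitly in case ETCDCTL_API=3 is exported.
  ETCDCTL_API=2 etcdctl \
    --cert-file "$ETCD_PKI/healthcheck-client.crt" \
    --key-file "$ETCD_PKI/healthcheck-client.key" \
    --ca-file "$ETCD_PKI/ca.crt" \
    --endpoints "$ETCD_ENDPOINTS" "$@"
}

etcd_v3() {
  # v3 API flags: --cert/--key/--cacert
  ETCDCTL_API=3 etcdctl \
    --cert "$ETCD_PKI/healthcheck-client.crt" \
    --key "$ETCD_PKI/healthcheck-client.key" \
    --cacert "$ETCD_PKI/ca.crt" \
    --endpoints "$ETCD_ENDPOINTS" "$@"
}
```

Usage is unchanged, for example `etcd_v3 endpoint health`. The difference is that it also works inside `$(...)` and in scripts.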

Check the etcd API version:

```shell
[root@k8s-m1 profile.d]# etcd_v3 version
etcdctl version: 3.2.24
API version: 3.2
```

Etcd backup:

```shell
[root@k8s-m1 overlord]# etcd_v3 snapshot save test.db
Snapshot saved at test.db
```
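For routine backups, a timestamped file name avoids overwriting the previous snapshot. `etcdctl snapshot status` can then confirm the file is readable. The sketch below assumes a backup directory and a single endpoint, neither of which appear in this post, and `etcd_backup` is a hypothetical name. It asks one member for the snapshot because newer etcdctl releases (3.4 and later) reject `snapshot save` with more than one endpoint.

```shell
#!/bin/sh
# Sketch: timestamped etcd v3 snapshot, then a quick check of the file.
# Assumed (not from the post): the backup directory and the single endpoint.
ETCD_PKI=${ETCD_PKI:-/etc/kubernetes/pki/etcd}
BACKUP_ENDPOINT=${BACKUP_ENDPOINT:-https://10.15.1.3:2379}

etcd_backup() {
  local dir file
  dir=${1:-/var/backups/etcd}   # hypothetical default location
  file="$dir/etcd-snapshot-$(date +%Y%m%d-%H%M%S).db"
  mkdir -p "$dir" || return 1
  # Ask a single member for the snapshot.
  ETCDCTL_API=3 etcdctl \
    --cert "$ETCD_PKI/healthcheck-client.crt" \
    --key "$ETCD_PKI/healthcheck-client.key" \
    --cacert "$ETCD_PKI/ca.crt" \
    --endpoints "$BACKUP_ENDPOINT" \
    snapshot save "$file" >&2 || return 1
  # Reads the local file and prints hash, revision, key count and size.
  ETCDCTL_API=3 etcdctl snapshot status "$file" --write-out=table >&2 || return 1
  echo "$file"   # only the path goes to stdout
}
```

Example: `snap=$(etcd_backup /data/etcd-backup)` leaves the new file's path in `$snap`, ready to be copied to the other hosts.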

Etcd restore. First stop etcd on every etcd host:

```shell
[root@k8s-m1 overlord]# systemctl stop etcd
```

Delete the etcd data directory on every etcd host:

```shell
[root@k8s-m1 overlord]# rm -rf /var/lib/etcd
```
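`etcdctl snapshot restore` refuses to write into an existing data directory, which is why it has to go first. A more cautious variant renames the directory instead of deleting it, so the old data can still be inspected or put back. The sketch below is an alternative to `rm -rf`, not the method used in this post. The `.bak-<timestamp>` suffix and the function name are assumptions.

```shell
#!/bin/sh
# Sketch: move the etcd data directory aside instead of deleting it.
# Run only after etcd is stopped. The ".bak-<timestamp>" suffix is assumed.
etcd_move_datadir() {
  local data_dir=${1:-/var/lib/etcd}
  local target
  if [ ! -d "$data_dir" ]; then
    echo "no data dir at $data_dir" >&2
    return 1
  fi
  target="$data_dir.bak-$(date +%Y%m%d-%H%M%S)"
  mv "$data_dir" "$target" || return 1
  echo "$target"   # print where the old data went
}
```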

Copy the backup file to each etcd host:

```shell
[root@k8s-m1 overlord]# scp test.db overlord@10.15.1.3:/home/overlord/
[root@k8s-m1 overlord]# scp test.db overlord@10.15.1.4:/home/overlord/
[root@k8s-m1 overlord]# scp test.db overlord@10.15.1.5:/home/overlord/
```
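The same copy step can be written as one loop over the hosts. The sketch below reuses the file, user, hosts and target directory from the commands above. `distribute_snapshot` is a hypothetical name, and it stops at the first failed copy.

```shell
#!/bin/sh
# Sketch: copy one snapshot file to several hosts, stopping on the first error.
# Arguments: FILE USER DEST_DIR HOST...
distribute_snapshot() {
  local file=$1 user=$2 dest=$3
  shift 3
  local host
  for host in "$@"; do
    scp "$file" "$user@$host:$dest" || return 1
  done
}
```

Usage: `distribute_snapshot test.db overlord /home/overlord/ 10.15.1.3 10.15.1.4 10.15.1.5`.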

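Restore the snapshot on every etcd host. Each member needs its own `--name` and `--initial-advertise-peer-urls`, while `--initial-cluster` is the same everywhere. The commands read the snapshot from `/root/test.db`. Adjust that path if you copied the file elsewhere; the `scp` commands above put it in `/home/overlord/`.

On k8s-m1 (10.15.1.3):

```shell
ETCDCTL_API=3 etcdctl snapshot restore /root/test.db \
  --name k8s-m1 \
  --initial-advertise-peer-urls https://10.15.1.3:2380 \
  --initial-cluster k8s-m1=https://10.15.1.3:2380,k8s-m2=https://10.15.1.4:2380,k8s-m3=https://10.15.1.5:2380 \
  --data-dir /var/lib/etcd/
```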

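On k8s-m2 (10.15.1.4):

```shell
ETCDCTL_API=3 etcdctl snapshot restore /root/test.db \
  --name k8s-m2 \
  --initial-advertise-peer-urls https://10.15.1.4:2380 \
  --initial-cluster k8s-m1=https://10.15.1.3:2380,k8s-m2=https://10.15.1.4:2380,k8s-m3=https://10.15.1.5:2380 \
  --data-dir /var/lib/etcd/
```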

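On k8s-m3 (10.15.1.5):

```shell
ETCDCTL_API=3 etcdctl snapshot restore /root/test.db \
  --name k8s-m3 \
  --initial-advertise-peer-urls https://10.15.1.5:2380 \
  --initial-cluster k8s-m1=https://10.15.1.3:2380,k8s-m2=https://10.15.1.4:2380,k8s-m3=https://10.15.1.5:2380 \
  --data-dir /var/lib/etcd/
```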
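The three restore commands differ only in the member name and peer IP, so a typo in one of them is easy to miss. The sketch below derives each command from a single member list. The names and IPs come from this post, while the function names are hypothetical. It looks up the member by the IP you pass, builds `--initial-cluster` from the whole list, and refuses unknown IPs.

```shell
#!/bin/sh
# Sketch: build the per-member restore command from one member list.
# Format of each entry: NAME=PEER_IP (values taken from this post).
ETCD_MEMBERS="k8s-m1=10.15.1.3 k8s-m2=10.15.1.4 k8s-m3=10.15.1.5"

# Print "k8s-m1=https://10.15.1.3:2380,..." for --initial-cluster.
etcd_initial_cluster() {
  local out="" m
  for m in $ETCD_MEMBERS; do
    out="${out:+$out,}${m%%=*}=https://${m#*=}:2380"
  done
  echo "$out"
}

# Restore SNAPSHOT into DATA_DIR for the member whose peer IP is MY_IP.
etcd_restore_member() {
  local my_ip=$1 snapshot=$2 data_dir=${3:-/var/lib/etcd}
  local name="" m
  for m in $ETCD_MEMBERS; do
    if [ "${m#*=}" = "$my_ip" ]; then
      name=${m%%=*}
    fi
  done
  if [ -z "$name" ]; then
    echo "unknown member IP: $my_ip" >&2
    return 1
  fi
  ETCDCTL_API=3 etcdctl snapshot restore "$snapshot" \
    --name "$name" \
    --initial-advertise-peer-urls "https://$my_ip:2380" \
    --initial-cluster "$(etcd_initial_cluster)" \
    --data-dir "$data_dir"
}
```

For example, `etcd_restore_member 10.15.1.4 /root/test.db` on k8s-m2 runs the same command as the second block above.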

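Start etcd on every etcd host (`systemctl start etcd`), then check the etcd cluster status:

```shell
[root@k8s-m1 lib]# etcd_v3 endpoint status --write-out=table
+------------------------+------------------+---------+---------+-----------+-----------+------------+
|        ENDPOINT        |        ID        | VERSION | DB SIZE | IS LEADER | RAFT TERM | RAFT INDEX |
+------------------------+------------------+---------+---------+-----------+-----------+------------+
| https://10.15.1.3:2379 | daad158099a5cdca |  3.2.24 | 9.7 MB  |      true |         2 |        218 |
| https://10.15.1.4:2379 | 2717bbfe0f23b647 |  3.2.24 | 9.7 MB  |     false |         2 |        218 |
| https://10.15.1.5:2379 | 81183085587ceed2 |  3.2.24 | 9.7 MB  |     false |         2 |        218 |
+------------------------+------------------+---------+---------+-----------+-----------+------------+
```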
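Right after `systemctl start etcd`, the members may need a few seconds to elect a leader, so the first status check can fail. The sketch below polls until `endpoint health` succeeds. The retry count, delay and function name are assumptions. It also assumes that, as in etcdctl 3.x, `endpoint health` exits non-zero while any listed endpoint is unhealthy. The cert paths and endpoints are the ones from `etcd.sh`.

```shell
#!/bin/sh
# Sketch: wait until all etcd endpoints report healthy.
# Arguments: [TRIES] [DELAY_SECONDS]; defaults are arbitrary.
ETCD_PKI=${ETCD_PKI:-/etc/kubernetes/pki/etcd}
ETCD_ENDPOINTS=${ETCD_ENDPOINTS:-https://10.15.1.3:2379,https://10.15.1.4:2379,https://10.15.1.5:2379}

wait_etcd_healthy() {
  local tries=${1:-30} delay=${2:-2} i=1
  while [ "$i" -le "$tries" ]; do
    if ETCDCTL_API=3 etcdctl \
        --cert "$ETCD_PKI/healthcheck-client.crt" \
        --key "$ETCD_PKI/healthcheck-client.key" \
        --cacert "$ETCD_PKI/ca.crt" \
        --endpoints "$ETCD_ENDPOINTS" \
        endpoint health >/dev/null 2>&1; then
      echo "etcd healthy after $i attempt(s)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "etcd still unhealthy after $tries attempts" >&2
  return 1
}
```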

Check that the Kubernetes cluster is usable again. Every node should show `Ready` in the STATUS column. The listing below keeps only the columns from ROLES onward:

```shell
[root@k8s-m1 lib]# kubectl get nodes -o wide
ROLES    AGE    VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION          CONTAINER-RUNTIME
master   112d   v1.13.5   10.15.1.3     <none>        CentOS Linux 7 (Core)   3.10.0-514.el7.x86_64   docker://18.6.3
master   112d   v1.13.5   10.15.1.4     <none>        CentOS Linux 7 (Core)   3.10.0-514.el7.x86_64   docker://18.6.3
master   112d   v1.13.5   10.15.1.5     <none>        CentOS Linux 7 (Core)   3.10.0-514.el7.x86_64   docker://18.6.3
node     112d   v1.13.5   10.15.1.6     <none>        CentOS Linux 7 (Core)   3.10.0-514.el7.x86_64   docker://18.6.3
node     112d   v1.13.5   10.15.1.7     <none>        CentOS Linux 7 (Core)   3.10.0-514.el7.x86_64   docker://18.6.3
```

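Etcd data compaction. By default, etcd does not compact its data automatically. It keeps the history of every key, so frequent changes pile up more and more revisions and the database keeps growing. The default database size limit is 2 GB. When etcd logs the following error, the database is full and must be compacted to free space.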

```shell
Error from server: etcdserver: mvcc: database space exceeded
```
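To see how close each member is to that limit before the error appears, you can compare the `dbSize` field of `endpoint status --write-out=json` with the quota. The sketch below assumes the default 2 GiB quota (2147483648 bytes). Set `QUOTA_BYTES` if `--quota-backend-bytes` was changed. It uses grep rather than jq, and `etcd_db_usage` is a hypothetical name.

```shell
#!/bin/sh
# Sketch: print each member's database size as a percentage of the quota.
# Input: "etcdctl endpoint status --write-out=json" on stdin.
# Assumption: default quota of 2 GiB unless QUOTA_BYTES is set.
QUOTA_BYTES=${QUOTA_BYTES:-2147483648}

etcd_db_usage() {
  grep -o '"dbSize":[0-9]*' | cut -d: -f2 | while read -r size; do
    echo "$((size * 100 / QUOTA_BYTES))% ($size bytes)"
  done
}
```

Usage: `etcd_v3 endpoint status --write-out=json | etcd_db_usage` prints one line per endpoint.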

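Get the current revision (run on an etcd host). Without `--endpoints`, etcdctl talks to `127.0.0.1:2379`. Add the certificate flags from `etcd.sh` if that listener requires TLS client certificates.

```shell
[root@k8s-m1 overlord]# ver=$(ETCDCTL_API=3 etcdctl --write-out="json" endpoint status | egrep -o '"revision":[0-9]*' | egrep -o '[0-9].*')
```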
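The one-liner above assumes a single endpoint. If the status comes from several endpoints (for example through the `etcd_v3` alias), it prints one revision per member. `compact $ver` would then receive several numbers and fail. The sketch below keeps only the highest revision. `etcd_current_revision` is a hypothetical name, and taking the maximum is an assumption; members normally report the same value anyway.

```shell
#!/bin/sh
# Sketch: reduce "endpoint status --write-out=json" output to one revision.
# Prints nothing if no "revision" field is found.
etcd_current_revision() {
  grep -o '"revision":[0-9]*' | cut -d: -f2 | sort -n | tail -n 1
}
```

Usage:

```shell
ver=$(etcd_v3 endpoint status --write-out=json | etcd_current_revision)
[ -n "$ver" ] && ETCDCTL_API=3 etcdctl compact "$ver"
```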

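Compact old revisions. Compaction goes through Raft and applies to the whole cluster, so running it once is enough. Repeating it on other hosts with the same revision only returns a "required revision has been compacted" error.

```shell
[root@k8s-m1 overlord]# ETCDCTL_API=3 etcdctl compact $ver
```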

Defragment the database (run on every etcd host, because defragmentation is per member):

```shell
[root@k8s-m1 overlord]# ETCDCTL_API=3 etcdctl defrag
```
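Instead of logging into each host, you can defragment the members from one machine by pointing etcdctl at one endpoint at a time. A member does not serve requests while it defragments, so going sequentially keeps the others available. The sketch below assumes the endpoints and cert paths from `etcd.sh`. `etcd_defrag_all` is a hypothetical name, and it stops at the first failure.

```shell
#!/bin/sh
# Sketch: defragment each member in turn, one endpoint per etcdctl call.
ETCD_PKI=${ETCD_PKI:-/etc/kubernetes/pki/etcd}
ETCD_ENDPOINTS=${ETCD_ENDPOINTS:-https://10.15.1.3:2379,https://10.15.1.4:2379,https://10.15.1.5:2379}

etcd_defrag_all() {
  local ep
  # Turn the comma-separated list into words for the loop.
  for ep in $(echo "$ETCD_ENDPOINTS" | tr ',' ' '); do
    echo "defragmenting $ep"
    ETCDCTL_API=3 etcdctl \
      --cert "$ETCD_PKI/healthcheck-client.crt" \
      --key "$ETCD_PKI/healthcheck-client.key" \
      --cacert "$ETCD_PKI/ca.crt" \
      --endpoints "$ep" defrag || return 1
  done
}
```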

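Check the database size after compaction:

```shell
[root@k8s-m1 overlord]# etcd_v3 endpoint status --write-out=table
+------------------------+------------------+---------+---------+-----------+-----------+------------+
|        ENDPOINT        |        ID        | VERSION | DB SIZE | IS LEADER | RAFT TERM | RAFT INDEX |
+------------------------+------------------+---------+---------+-----------+-----------+------------+
| https://10.15.1.3:2379 | daad158099a5cdca |  3.2.24 | 4.1 MB  |      true |         2 |       9044 |
| https://10.15.1.4:2379 | 2717bbfe0f23b647 |  3.2.24 | 5.6 MB  |     false |         2 |       9044 |
| https://10.15.1.5:2379 | 81183085587ceed2 |  3.2.24 | 5.5 MB  |     false |         2 |       9044 |
+------------------------+------------------+---------+---------+-----------+-----------+------------+
```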

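Disarm etcd alarms. `ETCDCTL_API=3 etcdctl alarm list` shows any active alarms. While an alarm is raised, etcd keeps rejecting writes (it is effectively read-only), even after space has been freed. Once all the steps above are complete, run the following on any one etcd host to disarm the alarm: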

```shell
[root@k8s-m1 overlord]# ETCDCTL_API=3 etcdctl alarm disarm
```

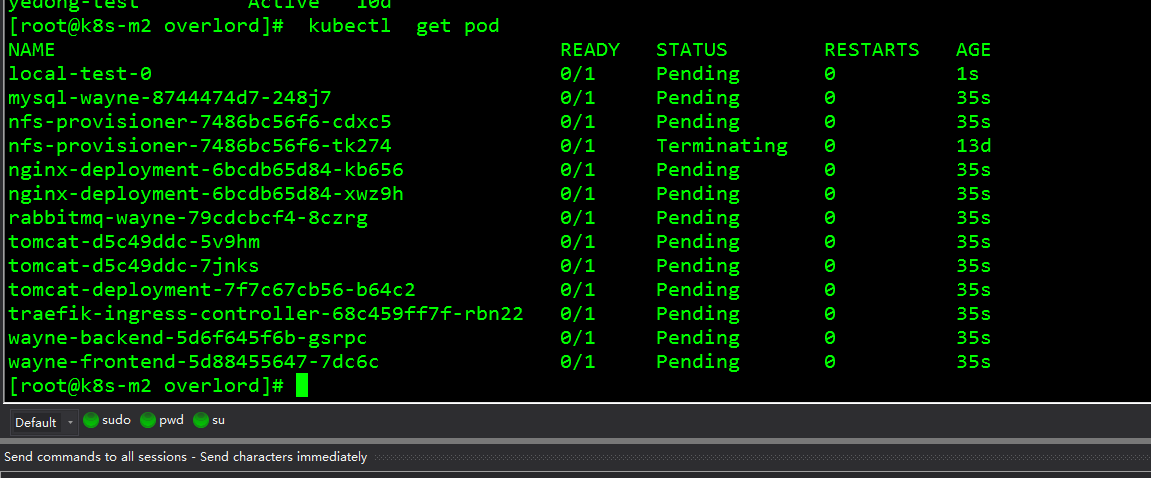
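The check and the disarm can be combined. The sketch below only disarms when `alarm list` reports something, then confirms the list is empty. It assumes `alarm list` prints nothing when no alarm is active. Like the commands above, it relies on etcdctl's default local endpoint. `etcd_clear_alarms` is a hypothetical name.

```shell
#!/bin/sh
# Sketch: disarm alarms only if any are listed, then verify none remain.
etcd_clear_alarms() {
  if [ -z "$(ETCDCTL_API=3 etcdctl alarm list)" ]; then
    echo "no alarms"
    return 0
  fi
  ETCDCTL_API=3 etcdctl alarm disarm || return 1
  # Succeeds only if the alarm list is now empty.
  [ -z "$(ETCDCTL_API=3 etcdctl alarm list)" ]
}
```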

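Troubleshooting. In this experiment, the following problem may appear after the restore: every Pod that is deleted gets recreated but stays in `Pending`.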

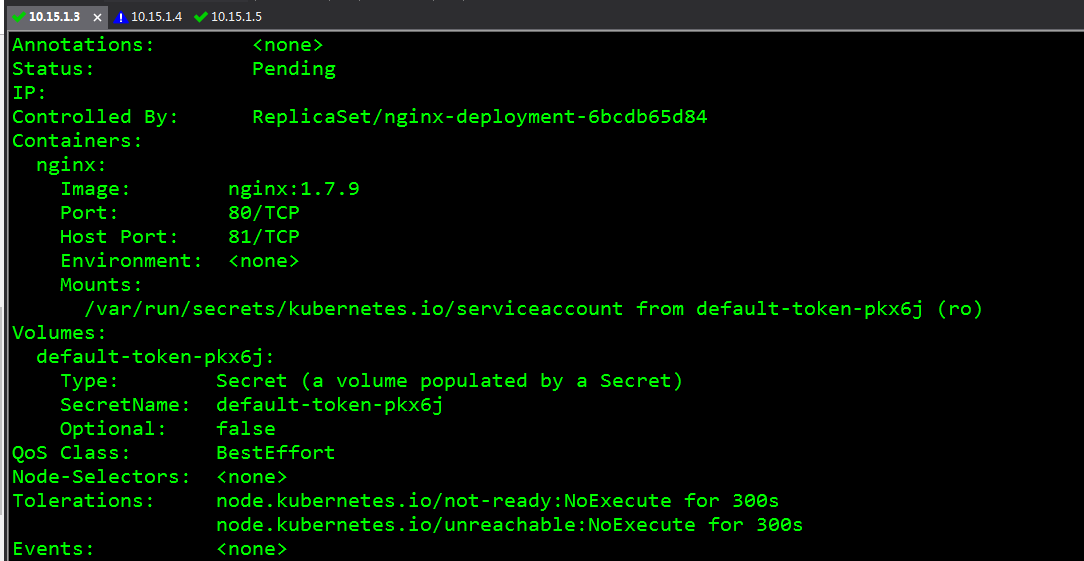

`kubectl describe pod` shows no events at all.

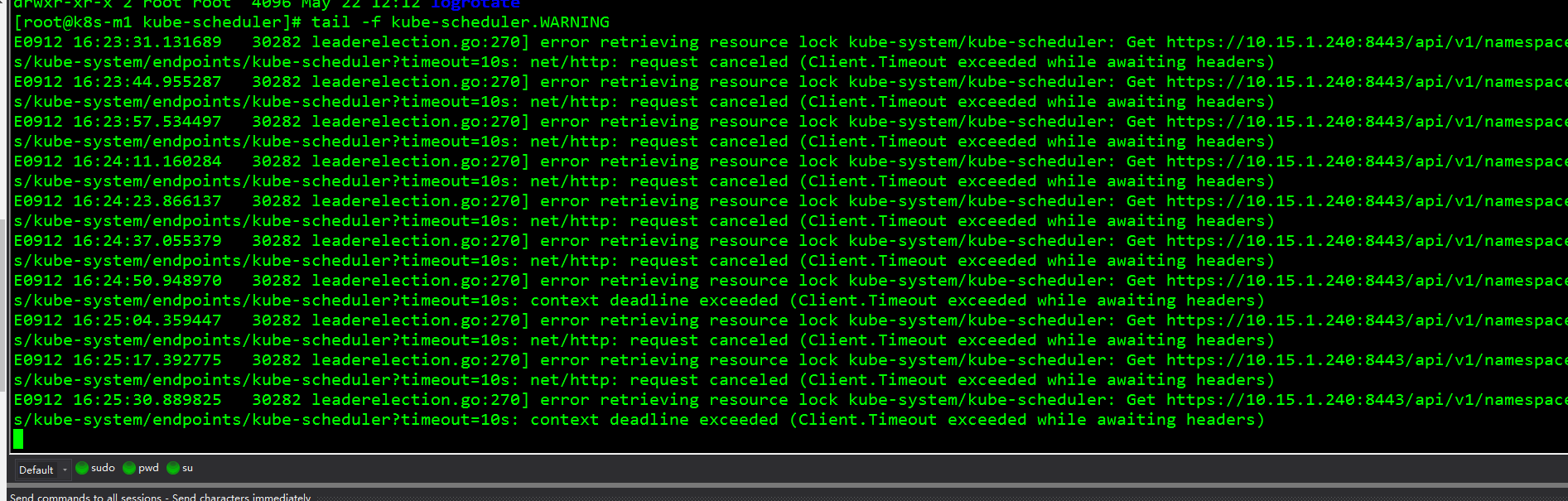

The scheduler logs show errors when connecting to the API server.

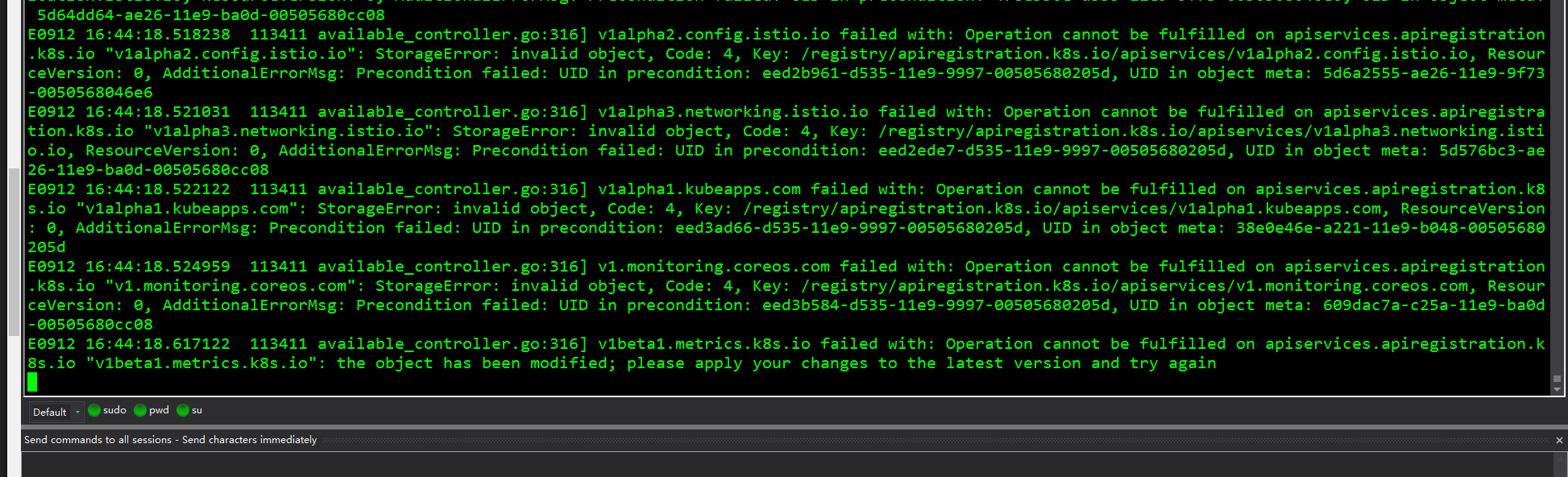

The API server logs show the error `the object has been modified; please apply your changes to the latest version and try again`. In this state, `kubectl api-resources` hangs without any output. Deleting the metrics-server APIService restores the cluster.
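A hanging `kubectl api-resources` is typical of an aggregated API whose backing service is unreachable. The sketch below lists the APIServices that the aggregator reports as unavailable. It assumes the default `kubectl get apiservice` column layout (NAME, SERVICE, AVAILABLE, AGE), and `unavailable_apiservices` is a hypothetical name. A broken metrics-server registration usually appears as `v1beta1.metrics.k8s.io`. Deleting it is what fixed the cluster here, but review the list before deleting anything.

```shell
#!/bin/sh
# Sketch: print names of APIServices whose AVAILABLE column starts with False.
# Assumes the default columns: NAME SERVICE AVAILABLE AGE.
unavailable_apiservices() {
  kubectl get apiservice --no-headers | awk '$3 ~ /^False/ { print $1 }'
}
```

Usage: run `unavailable_apiservices`, and if it prints `v1beta1.metrics.k8s.io`, delete that registration with `kubectl delete apiservice v1beta1.metrics.k8s.io`.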

Namespaces that cannot be deleted. A namespace can stay in `Terminating` indefinitely, as shown by:

```shell
[root@k8s-m1 ~]# kubectl get ns
```

Start an HTTP proxy to the API server:

```shell
[root@k8s-m1 ~]# kubectl proxy
Starting to serve on 127.0.0.1:8001
```

Open a second terminal (the proxy keeps running in the first one) and set a variable for the namespace:

```shell
[root@k8s-m1 overlord]# NAMESPACE=cattle-system
```

Export the stuck namespace as JSON. The `jq` filter already empties `spec.finalizers`:

```shell
kubectl get namespace $NAMESPACE -o json | jq '.spec = {"finalizers":[]}' > temp.json
```

Edit `temp.json`, which looks like this:

```json
{
  "apiVersion": "v1",
  "kind": "Namespace",
  "metadata": {
    "annotations": {
      "cattle.io/status": "{\"Conditions\":[{\"Type\":\"ResourceQuotaInit\",\"Status\":\"True\",\"Message\":\"\",\"LastUpdateTime\":\"2019-08-19T08:21:42Z\"},{\"Type\":\"InitialRolesPopulated\",\"Status\":\"True\",\"Message\":\"\",\"LastUpdateTime\":\"2019-08-19T08:21:47Z\"}]}",
      "field.cattle.io/projectId": "c-zcf9v:p-lwgnw",
      "kubectl.kubernetes.io/last-applied-configuration": "{\"apiVersion\":\"v1\",\"kind\":\"Namespace\",\"metadata\":{\"annotations\":{},\"name\":\"cattle-system\"}}\n",
      "lifecycle.cattle.io/create.namespace-auth": "true"
    },
    "creationTimestamp": "2019-08-19T08:21:31Z",
    "deletionGracePeriodSeconds": 0,
    "deletionTimestamp": "2019-09-17T10:15:32Z",
    "finalizers": [
      "controller.cattle.io/namespace-auth"
    ],
    "labels": {
      "field.cattle.io/projectId": "p-lwgnw"
    },
    "name": "cattle-system",
    "resourceVersion": "16595026",
    "selfLink": "/api/v1/namespaces/cattle-system",
    "uid": "59412a0d-c25a-11e9-ba0d-00505680cc08"
  },
  "spec": {
    "finalizers": []
  },
  "status": {
    "phase": "Terminating"
  }
}
```

Delete the entire `"resourceVersion": "16595026",` line and empty the `metadata.finalizers` list. The result:

```json
{
  "apiVersion": "v1",
  "kind": "Namespace",
  "metadata": {
    "annotations": {
      "cattle.io/status": "{\"Conditions\":[{\"Type\":\"ResourceQuotaInit\",\"Status\":\"True\",\"Message\":\"\",\"LastUpdateTime\":\"2019-08-19T08:21:42Z\"},{\"Type\":\"InitialRolesPopulated\",\"Status\":\"True\",\"Message\":\"\",\"LastUpdateTime\":\"2019-08-19T08:21:47Z\"}]}",
      "field.cattle.io/projectId": "c-zcf9v:p-lwgnw",
      "kubectl.kubernetes.io/last-applied-configuration": "{\"apiVersion\":\"v1\",\"kind\":\"Namespace\",\"metadata\":{\"annotations\":{},\"name\":\"cattle-system\"}}\n",
      "lifecycle.cattle.io/create.namespace-auth": "true"
    },
    "creationTimestamp": "2019-08-19T08:21:31Z",
    "deletionGracePeriodSeconds": 0,
    "deletionTimestamp": "2019-09-17T10:15:32Z",
    "finalizers": [],
    "labels": {
      "field.cattle.io/projectId": "p-lwgnw"
    },
    "name": "cattle-system",
    "selfLink": "/api/v1/namespaces/cattle-system",
    "uid": "59412a0d-c25a-11e9-ba0d-00505680cc08"
  },
  "spec": {
    "finalizers": []
  },
  "status": {
    "phase": "Terminating"
  }
}
```
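The manual edit can also be done with jq in the same step as the export. The sketch below removes `metadata.resourceVersion` and empties both finalizer lists, which matches the edits described above. `strip_ns_finalizers` is a hypothetical name.

```shell
#!/bin/sh
# Sketch: apply the temp.json edits with jq instead of a text editor.
# Reads namespace JSON on stdin, writes the edited JSON to stdout.
strip_ns_finalizers() {
  jq 'del(.metadata.resourceVersion)
      | .metadata.finalizers = []
      | .spec.finalizers = []'
}
```

Usage: `kubectl get namespace "$NAMESPACE" -o json | strip_ns_finalizers > temp.json`.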

Then send the edited object to the namespace's `finalize` endpoint through the proxy:

```shell
curl -k -H "Content-Type: application/json" -X PUT --data-binary @temp.json 127.0.0.1:8001/api/v1/namespaces/$NAMESPACE/finalize
```

Check the result with kubectl. The stuck namespace should no longer be listed:

```shell
[root@k8s-m1 overlord]# kubectl get ns
```

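If the method above does not work, a second option is to delete the objects directly from etcd. The first line shows the pattern for a Pod. In the second, replace `NAMESPACENAME` with the namespace name.

```shell
ETCDCTL_API=3 etcdctl del /registry/pods/default/pod-to-be-deleted-0
ETCDCTL_API=3 etcdctl del /registry/namespaces/NAMESPACENAME
```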
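`del` on a mistyped key silently deletes nothing, so it is worth confirming the exact key exists before deleting it. The sketch below uses an exact-key lookup (no `--prefix`, so `/registry/namespaces/foo` cannot also match `foobar`) and asks for confirmation. It assumes kube-apiserver's default `/registry` key prefix (`--etcd-prefix`). Like the commands above, it uses etcdctl's default local endpoint with the TLS flags omitted. `etcd_delete_key` is a hypothetical name.

```shell
#!/bin/sh
# Sketch: delete one exact etcd key after checking it exists and confirming.
etcd_delete_key() {
  local key=$1 answer
  # Exact-key lookup; prints nothing if the key does not exist.
  if [ -z "$(ETCDCTL_API=3 etcdctl get "$key" --keys-only)" ]; then
    echo "key not found: $key" >&2
    return 1
  fi
  printf 'delete %s? [y/N] ' "$key" >&2
  read -r answer
  [ "$answer" = y ] || return 1
  ETCDCTL_API=3 etcdctl del "$key"
}
```

Usage: `etcd_delete_key /registry/namespaces/cattle-system`.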